The NCAR-Wyoming Supercomputing Center (NWSC) was built to house the latest climate-research supercomputers operated by the National Center for Atmospheric Research (NCAR), a national laboratory based in Boulder, Colorado, and sponsored by the U.S. National Science Foundation (NSF). The Boulder Mesa Laboratory, where I worked for several years, still houses its own supercomputer center. But both space and electricity are precious in Boulder. So when the time came for NCAR to expand its supercomputing resources, a new facility was constructed about two hours north of Boulder and just a few minutes west of Cheyenne, Wyoming. My old friend and colleague Marla Meehl was good enough to talk Assistant Site Manager Jim Vandyke into giving me a tour of the new facility. It's nice to have friends in high places (the NCAR Mesa Lab sits at an altitude of 6109 feet above sea level).

The NWSC houses Yellowstone, an IBM iDataPlex compute cluster and the latest in a lengthy series of computers managed by NCAR for the use of climate scientists. NCAR has a long history of providing supercomputers for climate science, going all the way back to a CDC 3600 in 1963 and including the first CRAY supercomputer installed outside of Cray Research, the CRAY-1A, serial number 3.

Yellowstone represents a long evolution from those early machines. It is an IBM iDataPlex system consisting of (currently) 72,576 2.6 GHz processor cores. Each Intel Xeon chip has eight cores, each pizza-box-sized blade server compute node has two chips, each column holds at most (by my count) thirty-six pizza boxes, and each cabinet has at most two columns. There are one hundred cabinets, although not all of them hold compute nodes. The compute nodes use InfiniBand in a fat-tree topology (like the old Connection Machines, which NCAR also used at one time) as their interconnect fabric, Fibre Channel to reach the disk and tape storage subsystem, and ten gigabit Ethernet for more mundane purposes. Yellowstone has an aggregate memory capacity of more than 144 terabytes, eleven petabytes of disk space, and (my estimate) at least a hundred petabytes of tape storage in two StorageTek robotic tape libraries.
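Those figures hang together arithmetically. Here is a quick back-of-the-envelope check in Python. It assumes (my assumption, not a confirmed floor plan) that every compute cabinet is fully populated with two columns of thirty-six nodes; the function name is just for illustration.

```python
def yellowstone_layout(total_cores=72_576, cores_per_chip=8, chips_per_node=2,
                       nodes_per_column=36, columns_per_cabinet=2):
    """Derive node and cabinet counts from the figures quoted above.

    Assumes every compute cabinet is fully populated (two columns of
    36 nodes each), which is an upper bound, not a confirmed layout.
    """
    cores_per_node = cores_per_chip * chips_per_node            # 16 cores per node
    nodes, stray_cores = divmod(total_cores, cores_per_node)    # whole nodes, leftover cores
    nodes_per_cabinet = nodes_per_column * columns_per_cabinet  # 72 nodes per cabinet
    full_cabinets, stray_nodes = divmod(nodes, nodes_per_cabinet)
    return nodes, stray_cores, full_cabinets, stray_nodes


if __name__ == "__main__":
    nodes, stray_cores, cabinets, stray_nodes = yellowstone_layout()
    print(f"{nodes:,} nodes, {cabinets} full compute cabinets, "
          f"{stray_cores} stray cores, {stray_nodes} stray nodes")
    # prints: 4,536 nodes, 63 full compute cabinets, 0 stray cores, 0 stray nodes
```

The 72,576 cores divide evenly into 4,536 dual-chip nodes, which would fill exactly 63 cabinets under that assumption. That is comfortably within the one hundred cabinets on the floor, leaving room for the storage, visualization, and login cabinets.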

It all runs on Red Hat Linux. Major software subsystems include the IBM General Parallel File System (GPFS), the High Performance Storage System (HPSS), and IBM DB2.

The NWSC computer room runs, for the most part, dark. The NWSC has a full-time, 24x7 staff of perhaps twenty people, although that includes those who man NCAR's Network Operations Center (NOC). This is cloud computing optimized for computational science and climate research. Yellowstone's large user community of climate scientists and climate model developers, and even its system administrators, all access the system remotely across the Internet.

This is remarkable to an old (old) hand like me who worked for years at NCAR's Mesa Lab and was accustomed to its computer room being a busy, noisy place full of operators, administrators, programmers, and even managers running to and fro. But the NWSC facility has none of that. It is clean, neat, orderly, even quiet (relatively speaking), and mostly uninhabited. This is the future of computing (until, you know, it changes).

The infrastructure around Yellowstone was, for me, the real star of the tour. The NWSC was purpose-built to house massive compute clusters like Yellowstone, which currently takes up only a small portion of the new facility; there is lots of room for expansion.

Below is a series of photographs that I was graciously allowed to take with my iPhone 5 during my tour. I apologize for the photo quality. Better photographs can be found on the NWSC web site. All of these photographs, along with the comments, can be found on Flickr. Some of the photographs were truncated by Blogger; you can click on them to see the originals. For sure, any errors or omissions are strictly mine.

* * *

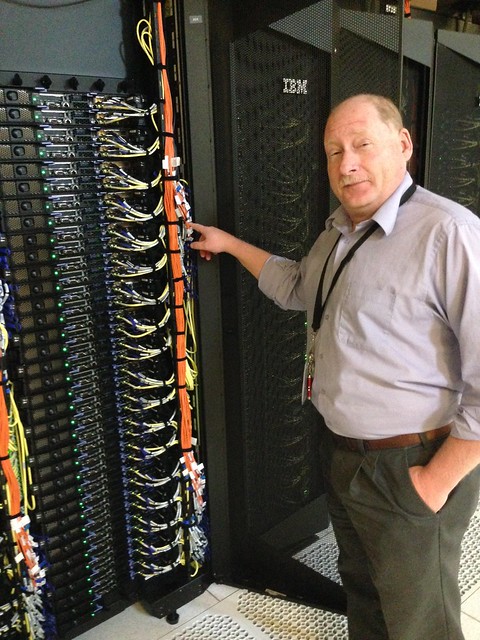

Compute Node Cabinet (Front Side)

I told Jim Vandyke, assistant site manager, to "point to something". In this particular cabinet I count thirty-six pizza-box "compute nodes" in each of two columns, each node with dual Intel Xeon chips, and each chip with eight 2.6 GHz execution cores. There are also cabinets of storage nodes crammed with disk drives, visualization/graphics nodes with GPUs, and even login nodes where users ponder their work and submit jobs.

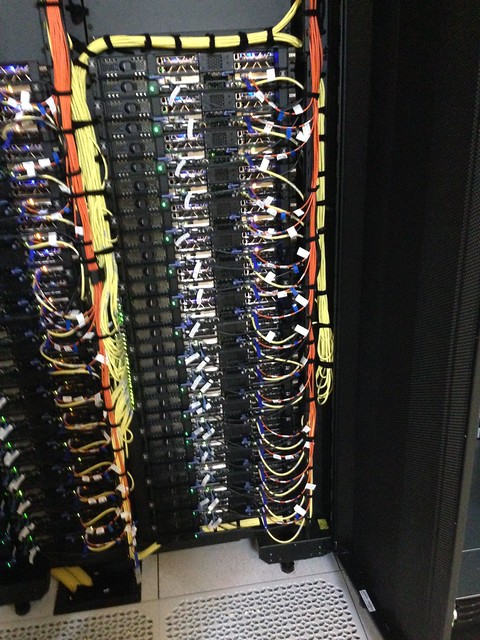

Compute Node Cabinet (Back Side)

Each pair of pizza boxes looks to have four fans. But what's interesting is the door to the cabinet on the right: that's a big water-cooled radiator. You can see the yellow water lines coming up from under the floor at the lower right.

Radiator

Interconnects

Classic separation of control and data: the nodes use an InfiniBand network in a Connection Machine-like fat-tree topology for the node interconnect, Fibre Channel to access the storage farm, and ten gigabit Ethernet for more mundane purposes.

Sticky Mat

You walk over a sticky mat when you enter any portion of the computer room. The tape libraries are in an even cleaner room environment (which is why I didn't get to see them); tape densities are so high (five terabytes uncompressed per cartridge) that a speck of dust poses a hazard.

Bug Zapper

Here's a detail you wouldn't expect: I saw several bug zappers in the infrastructure spaces, and they were zapping every few seconds. The building sits in an undeveloped area west of Cheyenne, where land and energy are relatively cheap compared to NCAR's Boulder supercomputer center location, and all sorts of critters have set up housekeeping.

Cooling Tower

This is looking out from the loading dock. There are a number of heat exchangers in the computer room cooling system. Ultimately, the final stage goes out to a cooling tower, but not before the waste heat is used to heat the LEED Gold-certified building.

Loading Dock

The loading dock, the hallway outside (big enough to assemble and stage equipment being installed), and the computer room are all at one uniform level, making it easy to roll in equipment right off the semi-trailer. I dimly recall being told that Yellowstone took 26 trailers to deliver. On the right you can see a freight elevator down to the lower infrastructure space. The gray square on the floor at the lower left is a built-in scale, so you can verify that you are not going to exceed the computer room floor's load capacity.

Heat Exchanger

There are a number of heat exchangers in the cooling system. This is the first one, for water from the computer room radiators. I had visions of the whole thing glowing red, but the volume of water in the closed system, and the high specific heat of water, are such that the water going into this heat exchanger is only a few degrees hotter than the water leaving it. It wouldn't be warm enough for bath water.
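Why only a few degrees? A steady-state energy balance, sketched here from textbook physics rather than NWSC engineering data: if the loop carries heat away at a rate $P$ with a water mass flow rate $\dot{m}$, the temperature difference across the exchanger is

$$
\Delta T = \frac{P}{\dot{m}\,c_p}, \qquad c_p \approx 4.18\ \mathrm{kJ/(kg\cdot K)} \text{ for water.}
$$

With purely illustrative numbers (assumed, not NWSC figures), removing 1 MW with a flow of 100 kg/s gives

$$
\Delta T = \frac{10^6\ \mathrm{W}}{(100\ \mathrm{kg/s})\,(4180\ \mathrm{J/(kg\cdot K)})} \approx 2.4\ \mathrm{K}.
$$

A large flow of water, with its high specific heat, keeps the temperature rise small even when the heat load is large.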

Fan Wall

This is a wall o' fans inside a room that is part of the cooling system. These fans pull air from the space above the computer room through a vertical plenum and down to this space below the computer room, where it is cooled by what are effectively gigantic swamp coolers. All of the air conditioning in the building is evaporative. Each fan has a set of louvers on the front that close if the fan stops running, to prevent pressure loss.

Power

This is right below a row of Yellowstone compute node racks in the computer room above. If you lifted a floor tile in the computer room, you would be looking twelve feet down into this area.

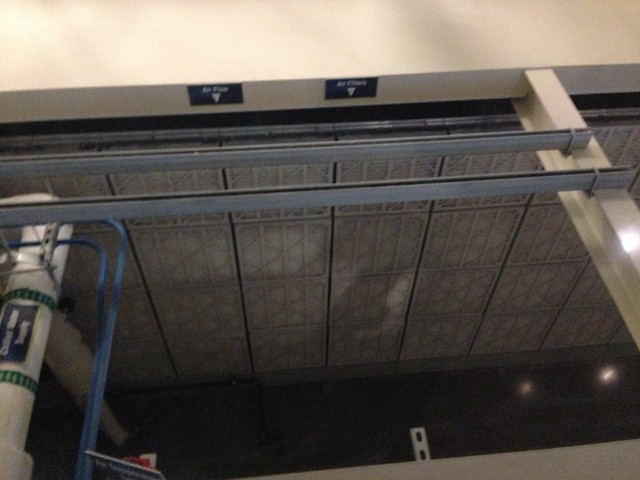

Filter Ceiling

If you want to run a supercomputer, everything has to be super: networking, interconnect, storage, power, HVAC. This is part of the air filtration system, sitting right below the computer room floor, into which the clean, cool air flows.

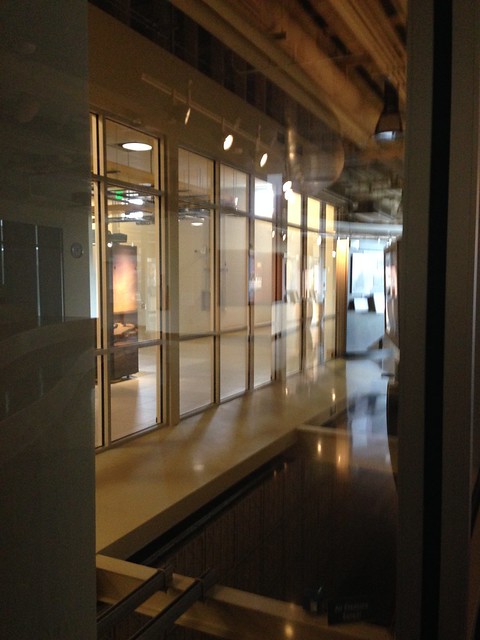

Vertical Air Plenum

This photograph was taken from a window in the visitors' area, looking into a vertical air plenum big enough to drive an automobile through. The wall of fans is directly below. The computer room is visible through a second set of windows running along the left; through them, one of the Yellowstone cabinets is just visible. Air moves from the enormous space (another story of the building, effectively) above the computer room, down through this vertical plenum, through the fan wall, through the cooling system, up into the ceiling of filters, and through the computer room floor. I didn't get a photograph of it, but the enormous disk farm generates so much heat that its cabinets are glassed off in the computer room with dedicated air ducts above and below.

Visitor Center

This is where you walk in and explain to the security folks who you are and why you are here. Before I got three words out of my mouth, the guard was handing me my name badge. It's nice to be expected. There's quite a nice interactive lobby display. The beginning of the actual computer facility is visible just to the right.

Warehouse of Compute Cycles

Typical: simple and unassuming; it looks like a warehouse. You'd never know that it contains what the TOP500 list ranks as the 29th most powerful computer in the world, based on the industry-standard LINPACK benchmark.

* * *

A big Thank You once again to Jim Vandyke, NWSC assistant site manager, for taking time out of his busy schedule for the tour (Jim is also the only network engineer on site), and a Hat Tip to Marla Meehl, head of NCAR's networking and telecommunications section, for setting it all up for me.

3 comments:

Great stuff, thanks for posting this.

The information you were given about the disk storage is out of date. There are now 16 PB of GPFS file systems all residing on serial attached SCSI (SAS) RAID disk using 3 TB drives. It is served to clients via both Infiniband and Ethernet switched fabrics. There is redundancy at every level, and we can take GPFS servers down without disrupting user access to the data.

Craig: thanks for the update!
