For the past forty years I've been keeping my eyes open for a new programming language to do the kinds of things I need to do: concurrent, parallel, asynchronous, real-time, close to bare metal, mobile, embedded, and lately, internet of things. Most of my paying gigs are still in C++ or C. But I've seen more than one ginormous C++ code base that was effectively undebuggable. And as productive as I am in C, my PDP-11 experience means I know it for what it is: a portable structured assembler language.

After a few false starts, I've finally arrived at Go and Rust.

Go - also known by the more search-friendly name Golang - is a language that compiles to machine code, unlike Python or Java. It is a product of Google. Go was invented in part by some former Bell Labs folks even older than I am who were among those responsible for the invention of UNIX and C.

Rust is also a language that compiles to machine code. It is a product of Mozilla, the folks that brought you among other things the Firefox browser, and a host of other folks that have formed a Rust community. Rust has been recently growing in popularity, if one is to believe more than one survey of programming languages.

If you want to skip the rest of this article and just peruse some open source Go and Rust code that I believe is reasonably idiomatic - that is, that uses these languages in the way in which they are each intended - you can find my repositories on GitHub. They are both licensed under the LGPL 2.1.

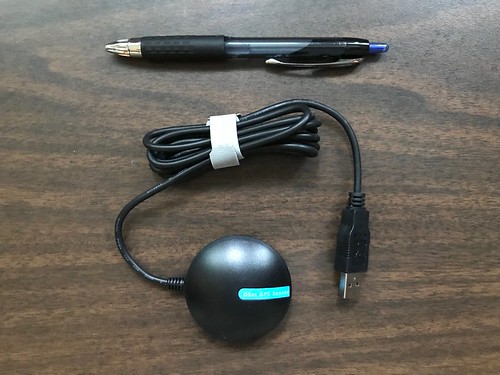

Each repository has a README that tells you how to extract the software from GitHub, build it, run the unit tests, and run the functional test. Both projects have been built, unit tested, and run on both an x86_64 Ubuntu system and an ARMv7 Raspbian system.

I will not teach you Go or Rust in this article. It's not even a "Hello, World!" kind of tutorial. I will give you a taste of what the languages look like, tell you what I learned about each language, what I liked and did not like, and what I think they would be useful for, as I solved the same problem in each.

Disclaimer: I am neither a Go nor a Rust expert, as will quickly become obvious.

Approach

My close friends and long-time colleagues will confirm that I have my share of personality defects. One of them is that I can only really learn by doing. Only through the laying on of hands (as I like to say) am I able to internalize new knowledge. One of the ways I choose to do this in a new programming language is to implement a non-trivial piece of code. My non-trivial code of choice is the Generic Cell Rate Algorithm (GCRA).

The GCRA is a rate control algorithm that I first encountered around 1996 when I was writing firmware in C++ at Lucent Technologies for products based on Asynchronous Transfer Mode (ATM). Implementing the GCRA - a library that implements the algorithm, unit tests, some utilities that use the library, and which can be used as a functional test - captures a lot of the day-to-day nuts-and-bolts experience of using a language: its usability, its support for object-oriented design, its build environment, its run-time library, its debuggability, its testability, its documentation, and so forth. I have implemented the GCRA in C++ (which shipped in a product), Java, C, and now Go and Rust.

Each of the two repositories cited above implements the GCRA in a library (Rust: crate), in the form of several functional units (Go: package, Rust: module), that includes an interface (Rust: trait) called Throttle that defines the common API, with two derived classes (Go and Rust: struct) named Gcra and Contract; a Contract is composed of two Gcra objects, one to limit the sustained rate, the other to limit the peak rate of an event stream. Events are whatever you want to rate limit: bits, bytes, packets, what have you. Unit tests are implemented for all functional units. One unit test simulates an event stream through the implementations. Another unit test generates actual events in the form of bytes in data packets through a test harness with multiple concurrent tasks using each language's native concurrency mechanism. The library is used to implement two utilities (Go: command, Rust: executable) named fletch and shape that are in turn used to create a functional test.
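For readers who have not met the GCRA before, here is a minimal sketch in Go of the shape described above: one interface, a Gcra that implements it, and a Contract built from two of them. It is not the code from the repositories. The method name Admit, the field names, and the use of plain integer ticks for time are all assumptions made for illustration, and the real Throttle interface has its own, different method set.

```go
package main

import "fmt"

// Throttle is a hypothetical, much-reduced interface for illustration only;
// the interface in the repositories has its own, different method set.
type Throttle interface {
	// Admit reports whether an event arriving at time now (in ticks)
	// conforms to the traffic contract, updating state if it does.
	Admit(now int64) bool
}

// Gcra implements the virtual scheduling form of the algorithm.
type Gcra struct {
	increment int64 // nominal ticks between events at the contracted rate
	limit     int64 // how many ticks early an event may arrive and still conform
	tat       int64 // theoretical arrival time of the next event
}

// conforms checks an arrival time without changing any state.
func (g *Gcra) conforms(now int64) bool { return now >= g.tat-g.limit }

// commit advances the theoretical arrival time for an admitted event.
func (g *Gcra) commit(now int64) {
	if now > g.tat {
		g.tat = now
	}
	g.tat += g.increment
}

// Admit makes *Gcra satisfy Throttle.
func (g *Gcra) Admit(now int64) bool {
	if !g.conforms(now) {
		return false
	}
	g.commit(now)
	return true
}

// Contract admits an event only if both its peak and sustained throttles agree.
type Contract struct {
	peak, sustained Gcra
}

// Admit makes *Contract satisfy Throttle.
func (c *Contract) Admit(now int64) bool {
	if !c.peak.conforms(now) || !c.sustained.conforms(now) {
		return false
	}
	c.peak.commit(now)
	c.sustained.commit(now)
	return true
}

func main() {
	var t Throttle = &Contract{
		peak:      Gcra{increment: 2},
		sustained: Gcra{increment: 10, limit: 20},
	}
	for now := int64(0); now <= 10; now++ {
		if t.Admit(now) {
			fmt.Println("admitted at tick", now)
		}
	}
}
```

With these made-up parameters the peak throttle spaces events at least two ticks apart, while the sustained throttle tolerates a short burst before holding the stream to one event every ten ticks.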

Go

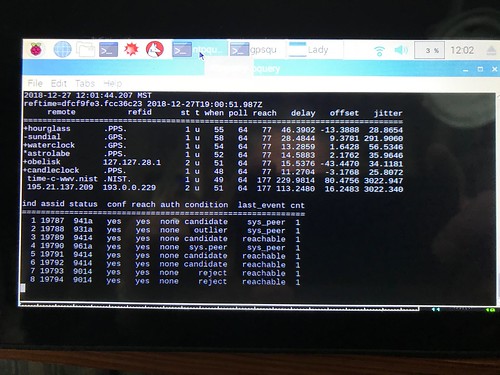

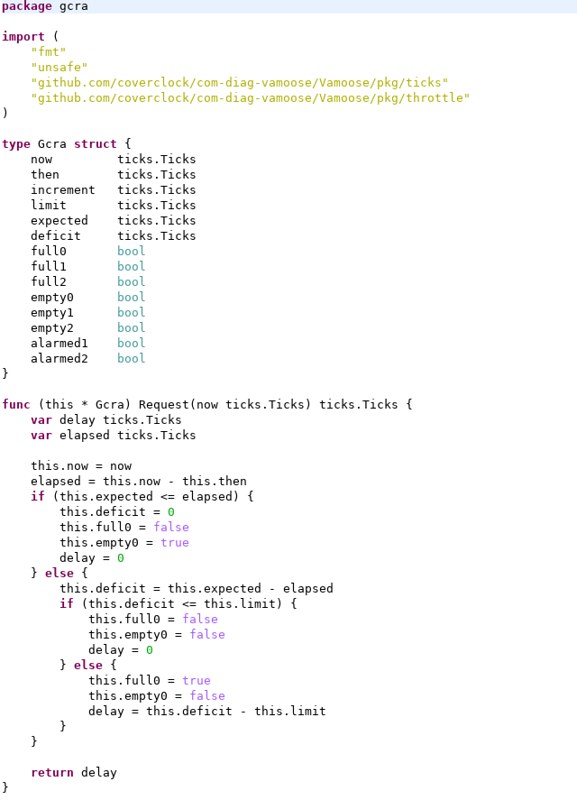

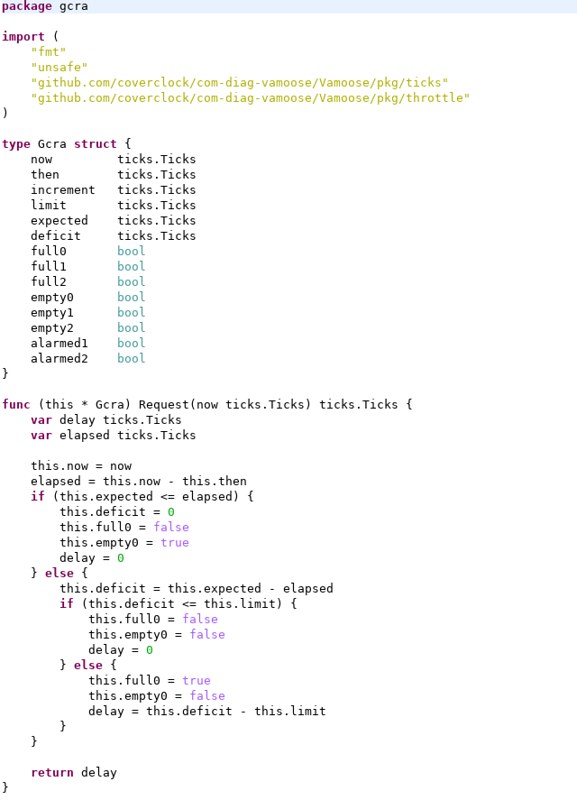

Here is a snapshot taken from the GCRA implementation in Go as it renders in the Eclipse IDE. It is just a code snippet to give you a feel for what different portions of the language look like. You can click on it to see a larger version.

The package keyword controls visibility; variables and functions inside a package are visible to functions inside the same package. Access is otherwise controlled simply by capitalizing the name of the variable or function, which makes them publicly accessible.

A class is created by defining a struct inside the package. (Several classes can be defined inside the same package.) Methods for that class are established by defining a function with an explicit object pointer as a kind of argument list separate from the function argument list.

There is no inheritance, but you can define an interface, which defines method signatures that have no implementation. Interfaces are not associated explicitly with a class but are defined via duck typing ("If it walks like a duck and quacks like a duck, it must be a duck"): if a class implements all the required method signatures of an interface, it is automatically a subclass of that interface. There is however a way to inherit the methods of another class via composition (but I didn't use that in this project).
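A small sketch may make the last three paragraphs concrete. The names here (Speaker, Duck, RoboDuck, Describe) are invented for illustration and have nothing to do with the GCRA code. The sketch shows a struct with a method on a pointer receiver, an interface satisfied without any explicit declaration, and method reuse through composition, which Go calls embedding.

```go
package main

import "fmt"

// Speaker is an interface: method signatures only, no implementation.
type Speaker interface {
	Speak() string
}

// Duck is a "class": a struct plus the methods declared on it.
// The lowercase field name is visible only inside this package.
type Duck struct {
	name string
}

// Speak has an explicit receiver (d *Duck) ahead of its argument list.
// Nothing here mentions Speaker; *Duck satisfies it just by having Speak.
func (d *Duck) Speak() string {
	return d.name + " says quack"
}

// RoboDuck embeds Duck, so Duck's methods are promoted to RoboDuck.
// This is composition, not inheritance: there is no subclass relationship.
type RoboDuck struct {
	Duck
	model int
}

// Describe accepts anything that satisfies Speaker. The capital letter
// would make it visible outside the package if this were a library.
func Describe(s Speaker) string {
	return s.Speak()
}

func main() {
	d := &Duck{name: "Donald"}
	r := &RoboDuck{Duck: Duck{name: "RD-1"}, model: 1}
	fmt.Println(Describe(d))
	fmt.Println(Describe(r))
}
```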

Like Java and Python, Go is a garbage collected language. Variables may be allocated from either the stack or the heap; the syntax of the language is agnostic to this and the developer has no control over it. The compiler performs escape analysis at compile time: if the pointer can escape the scope of the code block in which it was allocated, the object is allocated on the heap and is later deallocated by the garbage collector; otherwise it is allocated on the stack and deallocated when it goes out of scope.
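Here is a minimal sketch of the two cases, using a made-up point type. The comments describe what the compiler is permitted to do; what it actually decides for a given build can be inspected with `go build -gcflags=-m`, which prints its escape analysis decisions.

```go
package main

import "fmt"

type point struct{ x, y int }

// sum uses a local that never leaves the function, so the compiler is
// free to keep it on the stack.
func sum() int {
	p := point{1, 2}
	return p.x + p.y
}

// newPoint returns the address of a local. In C this would be a dangling
// pointer; in Go the variable escapes, so it is allocated on the heap and
// stays valid until the garbage collector finds it unreachable.
func newPoint(x, y int) *point {
	p := point{x, y}
	return &p
}

func main() {
	q := newPoint(3, 4)
	fmt.Println(sum(), q.x, q.y)
}
```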

Go has arrays, but unlike C and C++ they aren't ambiguously used sometimes as a variable and sometimes as a pointer. Arrays in Go are first class variables with a fixed length. If you want to use a subset of an array, Go provides an operator to define an array slice: a starting position within an array and a count of the number of elements in the slice. For example: you allocate a fixed length buffer (strictly speaking, make returns a slice backed by a newly allocated array of that length)

var buffer = make([] byte, int(burstsize))

but when, for example, you use a standard library function to read data into the buffer, you get back a slice

buffer[0:length]

depending on how much of the array was used.
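The difference between an array and a slice is easier to see in a complete program. This is a sketch only: fill is a hypothetical stand-in for a library read call, and the buffer size is arbitrary.

```go
package main

import "fmt"

// fill copies data into buffer and returns the slice of buffer actually
// used, much as a read call reports how many bytes it stored.
func fill(buffer []byte, data string) []byte {
	n := copy(buffer, data) // copy stops at the shorter of the two lengths
	return buffer[0:n]
}

func main() {
	var array [8]byte  // a true array: the length is part of the type
	duplicate := array // arrays are values, so this copies all 8 bytes
	duplicate[0] = 'x' // changes the copy, not the original
	fmt.Println(array[0], duplicate[0])

	buffer := make([]byte, 8) // a slice backed by a new 8-byte array
	used := fill(buffer, "hello")
	fmt.Println(len(used), cap(used), string(used))

	used[0] = 'H' // a slice shares storage with the buffer it came from
	fmt.Println(string(buffer[0:5]))
}
```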

Go has explicit width integer types, something every embedded developer will appreciate. Unlike C or C++, there is no implicit conversion between integer (or floating point) types. When you do math with mixed types, you must explicitly cast the types to match.

datum[0] = byte(rand.Int31n(int32('~') - int32(' ') + 1) + int32(' '))
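A self-contained sketch of the same rule follows. The function names are hypothetical; printable mirrors the arithmetic in the line above but takes its input as an argument instead of calling the random number generator.

```go
package main

import "fmt"

// average mixes a 64-bit signed total with a 16-bit unsigned count.
// Neither operand is converted implicitly, so both conversions are required.
func average(total int64, count uint16) float64 {
	return float64(total) / float64(count)
}

// printable maps any non-negative n onto the printable ASCII range
// from ' ' to '~' inclusive.
func printable(n int32) byte {
	return byte(n%(int32('~')-int32(' ')+1) + int32(' '))
}

func main() {
	var big int32 = 300
	// Converting a variable to a narrower type compiles, but silently
	// keeps only the low-order bits.
	fmt.Println(average(7, 2), string(rune(printable(94))), byte(big))
}
```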

The Go compiler complains if you import a package that you don't use, or if you declare a variable that you don't use. While this is irritating at first, it really contributes to cleaner code.

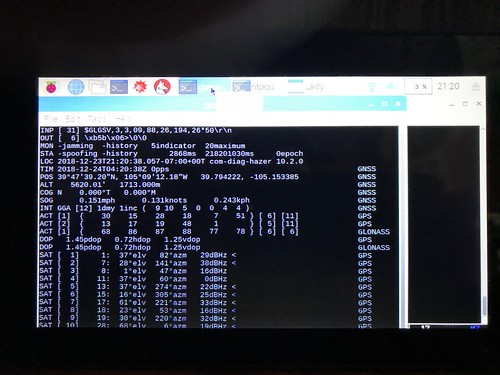

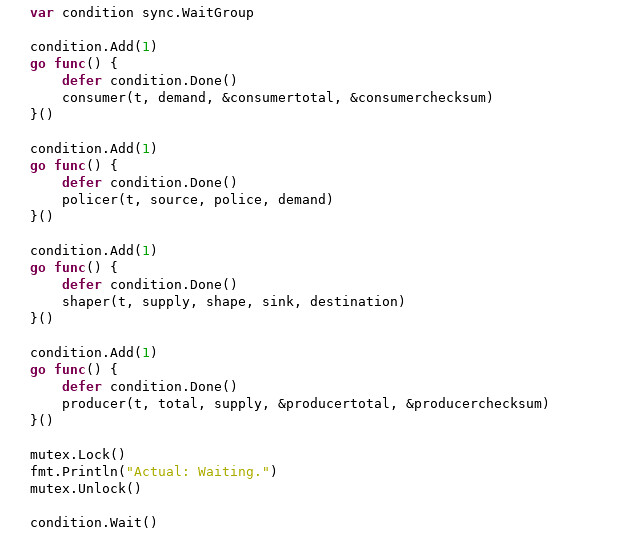

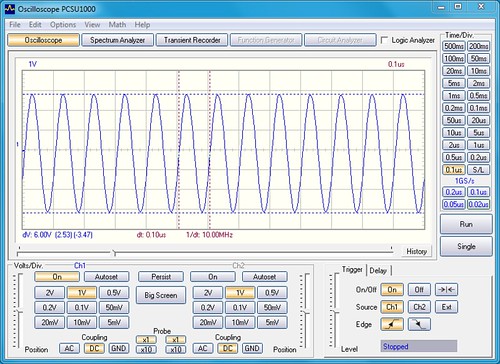

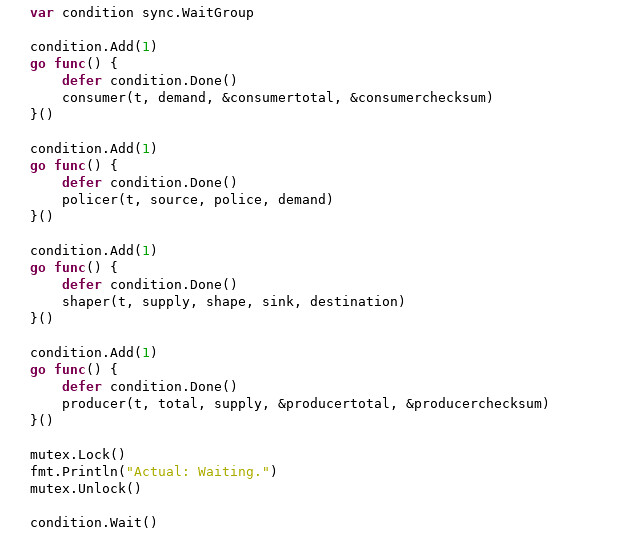

Here is a code snippet that shows how the unit test harness I wrote in Go creates four different concurrent tasks, one to produce a data stream, one to shape it to conform to a traffic contract, one to police it using another traffic contract, and one to consume it.

These concurrent tasks in Go aren't POSIX threads. They are goroutines, very low overhead coroutines, all of which run in the context of one or more POSIX threads. Go typically creates a POSIX thread for every logical core on the processor on which the Go program runs. Each thread can multiplex one or more goroutines. Because goroutines are much lighter weight than a POSIX thread, they are much more scalable; a single Go program can consist of dozens, hundreds, or even thousands of goroutines. This allows you to exploit many processor cores by assigning a new goroutine to, for example, every individual incoming network packet, or to every one of thousands of concurrent incoming data streams.

I've implemented this kind of architecture myself in embedded projects, where each coroutine was one state machine among many, all managed by a single thread (a VxWorks task in my specific case). Each state machine handled one among tens of thousands of simultaneous data streams. But I wrote thousands of lines of C++ code to do that; in Go, I could have done it in just a few pages of code.

This is what I think is Go's real raison d'etre: allowing the developer to exploit large numbers of processor cores by making it easy, even trivial, to write highly parallel or pipelined algorithms.

The go keyword is used to spawn off a function into a goroutine. Above, the function is defined inline, as a kind of closure. I use a WaitGroup, a kind of counting semaphore, to block the main thread of control until every goroutine completes. Inside each goroutine body I use the defer keyword to register a function that will be automatically called when the function that is the goroutine goes out of scope (essentially exits its terminating curly bracket) for any reason. That function signals the WaitGroup. Then I call my own function that actually implements the goroutine's intended action.
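For readers who cannot make out the screenshot, the pattern just described looks roughly like the following. This is a sketch of the general idiom, not a copy of the test harness; stage and runAll are hypothetical names, and each stage here merely builds a string.

```go
package main

import (
	"fmt"
	"sync"
)

// stage stands in for the function that does a goroutine's real work.
func stage(name string) string {
	return name + " done"
}

// runAll starts one goroutine per name and waits for all of them.
func runAll(names []string) []string {
	results := make([]string, len(names))
	var wg sync.WaitGroup
	for i, name := range names {
		wg.Add(1) // one more goroutine to wait for
		go func(i int, name string) {
			defer wg.Done() // runs however this closure exits
			// Each goroutine writes only its own element, so no lock is needed.
			results[i] = stage(name)
		}(i, name)
	}
	wg.Wait() // block until every goroutine has called Done
	return results
}

func main() {
	names := []string{"producer", "shaper", "policer", "consumer"}
	for _, result := range runAll(names) {
		fmt.Println(result)
	}
}
```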

Although I don't show it here, Go has a built-in message passing mechanism called a channel or chan. My producer and shaper goroutines, and policer and consumer goroutines, communicate over channels. A channel has a type, defining what kind of object it queues, and a depth, defining how many of those objects can be queued before the sender blocks.
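A minimal sketch of a typed channel with a depth follows. The producer and consumer here are toy functions that pass integers, not the ones in the test harness, and the counts and depth are arbitrary.

```go
package main

import "fmt"

// producer sends count integers and then closes the channel to signal
// that no more are coming. It can only send on out (chan<-).
func producer(count int, out chan<- int) {
	for i := 0; i < count; i++ {
		out <- i // blocks whenever the channel already holds depth items
	}
	close(out)
}

// consumer receives until the channel is closed and drained.
// It can only receive from in (<-chan).
func consumer(in <-chan int) int {
	total := 0
	for value := range in {
		total += value
	}
	return total
}

// pipeline connects the two with a channel of the given depth.
func pipeline(count, depth int) int {
	channel := make(chan int, depth) // element type int, queue depth as given
	go producer(count, channel)
	return consumer(channel)
}

func main() {
	fmt.Println(pipeline(5, 2))
}
```

A depth of zero also works: the channel is then unbuffered, and every send waits for a matching receive.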

My shaper and policer goroutines communicate using UDP sockets. The standard Go library has a comprehensive set of packages that include sockets, encryption, JSON, and other useful stuff.

If you stick to the directory layout expected by the Go tool chain, building a library and applications is as simple as

go build github.com/coverclock/com-diag-vamoose/Vamoose/cmd/fletch

go build github.com/coverclock/com-diag-vamoose/Vamoose/cmd/shape

and running the unit tests is a matter of

go test github.com/coverclock/com-diag-vamoose/Vamoose/pkg/ticks

go test github.com/coverclock/com-diag-vamoose/Vamoose/pkg/fletcher

go test github.com/coverclock/com-diag-vamoose/Vamoose/pkg/throttle

go test github.com/coverclock/com-diag-vamoose/Vamoose/pkg/gcra

go test github.com/coverclock/com-diag-vamoose/Vamoose/pkg/contract

One of the downsides of Go is that it doesn't play well with valgrind. I'd like to think that with its garbage collector, checking for memory leaks isn't necessary. But call me paranoid. Running valgrind on a Go application is an invitation to be inundated with worrisome warning messages that probably have nothing to do with your own code.

Rust

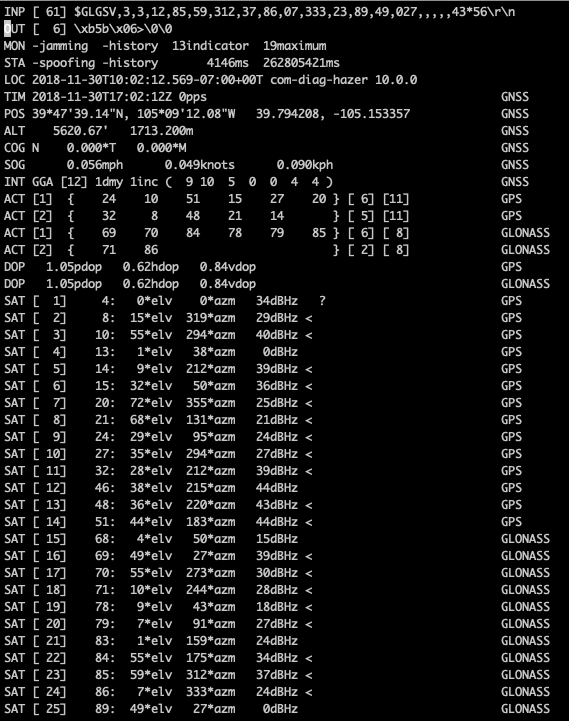

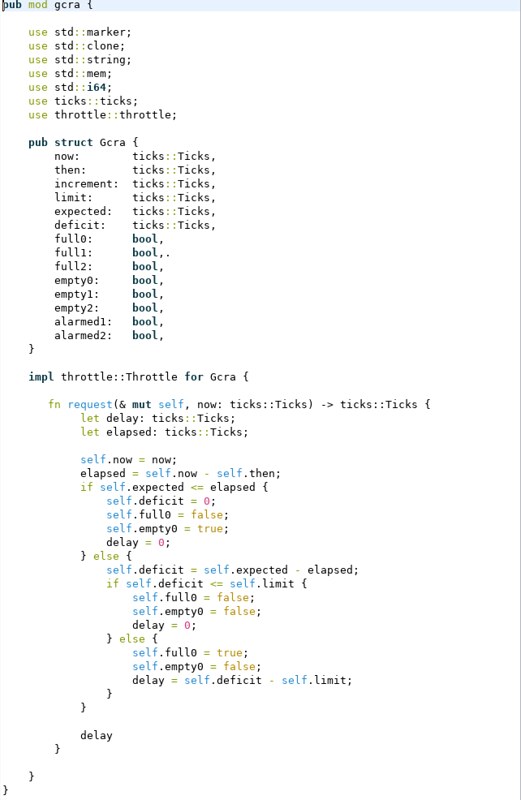

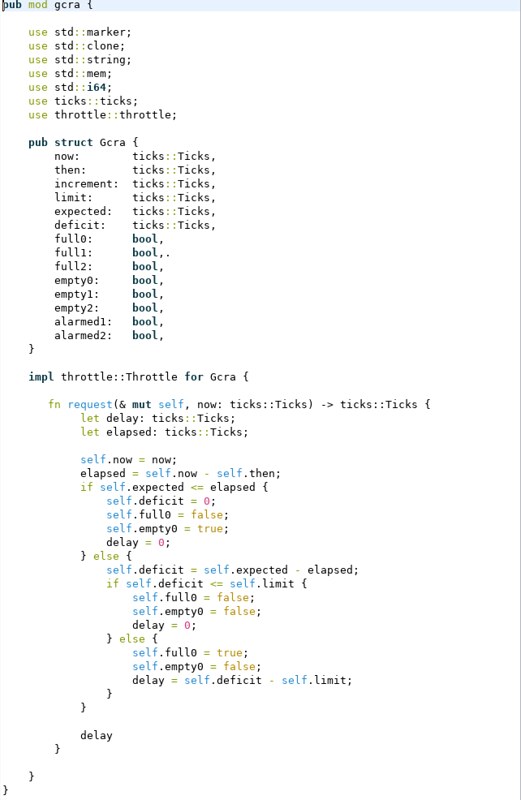

Here is another snapshot taken from the GCRA implementation in Rust as it renders in the Eclipse IDE. It is also just a code snippet to give you a feel for what different portions of the language look like. You can click on it to see a larger version.

Visibility in Rust is defined by what is inside the same module defined in the mod code block. Unlike Go, access is controlled using a keyword, pub.

A class in Rust is defined as a struct. More than one class can be defined inside the same module. Methods for a class are defined in the impl code block, and applicable interfaces identified using the post-fix for keyword.

In Rust, an interface is defined as a trait. Like Go, Rust doesn't have inheritance, but a trait can implement actual code.

When I write code in C++, I of course use the new and delete operators to explicitly allocate and deallocate objects on the heap. I always have to come to grips with when it is appropriate to call delete. I go through a kind of static code analysis in my head: if I pass this pointer to this function, is it merely borrowing it (so that it is still the responsibility of the caller to deallocate it), or am I moving the object to the function (so that it is now responsible for either deallocating it, or passing that responsibility on to someone else).

The Rust compiler does this too, at compile time, through the actions of its borrow checker. Rust does not do garbage collection. Instead, memory is allocated when the developer defines a variable. Then the compiler tracks that memory reference at compile time, enforcing hard and fast rules as to whether you can pass that pointer to a function, and whether that pass is a borrow or a move. The memory is automatically deallocated when it goes out of the scope in which it was originally allocated, or into which it was moved.

There are some exceptions to this. There are containers provided by the Rust standard library that are allocated on the heap and which implement reference counts to determine when they can be deallocated. And you can explicitly deallocate memory before it goes out of scope using the drop function.

Along with this almost no-cost memory management scheme is a set of rules which are rigidly enforced at compile time: you can have either one and only one read/write (mutable or mut) reference (pointer) to an object, or you can have multiple read-only (immutable) references to an object; and no null references.

Rust implements arrays and array slices very similarly to Go. You allocate a fixed size array

let mut buffer = [0u8; 65536];

and an input function effectively returns a slice

buffer[0..length]

depending on how much data was read in.

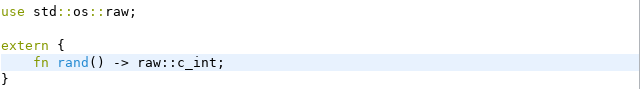

Just like Go, Rust has explicit width integer types, and there is no implicit conversion between integer (or floating point) types. When you do math with mixed types, you must explicitly cast the types to match.

let byte: u8 = ((rand() % (maximum as raw::c_int)) + 1) as u8;

Like Go, the Rust compiler complains if you import a module that you don't use, or if you declare a variable that you don't use, or if you initialize a variable to a value when you declare it and then don't use that value, or even if you have extra parentheses in an expression (that part really irritates me).

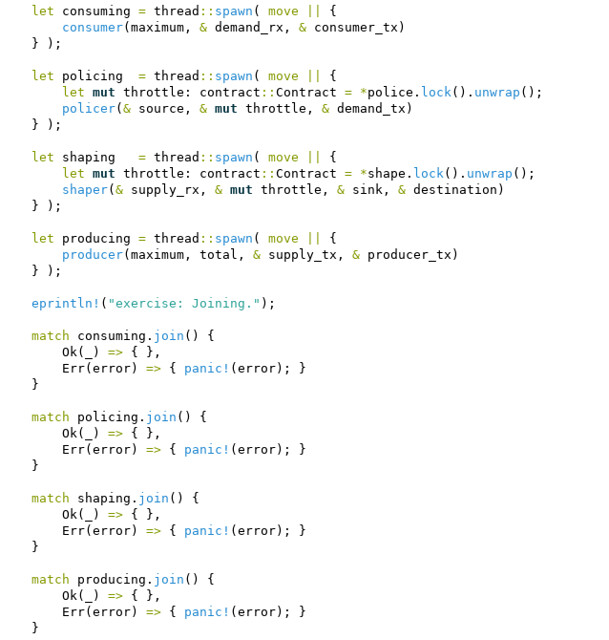

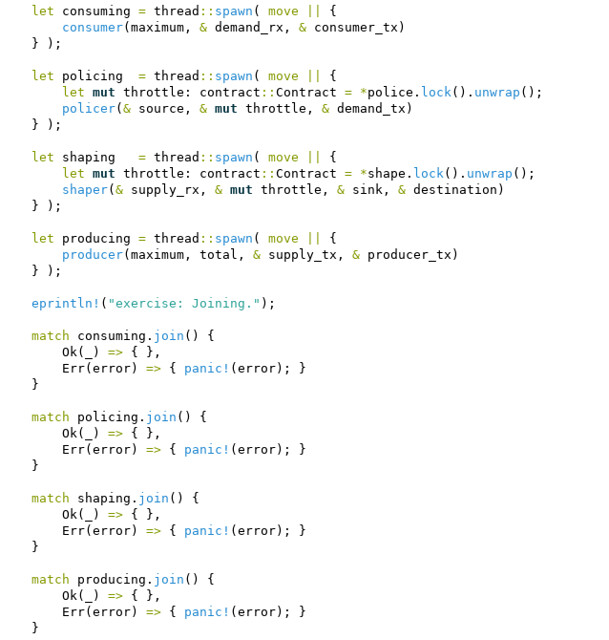

Here is a code snippet that shows how the unit test harness I wrote in Rust creates four different concurrent tasks, one to produce a data stream, one to shape it to conform to a traffic contract, one to police it using another traffic contract, and one to consume it, just like I did in Go.

Rust implements concurrency using full POSIX threads. Above I spawn off a thread defined as a kind of closure, and each thread calls its producer, shaper, policer, or consumer implementation in the form of a function. The main routine uses a POSIX thread join to wait until the four threads complete.

In the spirit of the borrow checker described above, the Rust compiler prevents data races between threads by forcing the developer to protect shared resources using a synchronization mechanism. I use a Mutex, not shown here except for the use of its lock method; the unlock is performed automatically when the variable goes out of scope. The compiler also forces the developer to allocate shared data on the heap, also not shown here except for its use of the unwrap method which returns a pointer to the object from its heap container, an Arc (for Atomic reference counting) in this case. The use of a reference counted container allocated on the heap prevents the object from being deallocated when its original reference goes out of scope in the main thread (which can exit before the child thread), and instead is deallocated when its reference count goes to zero. Like the borrow checker, this is all enforced at compile time.

The Rust standard library also provides the channel as a message passing mechanism, and this implementation uses channels in much the same way as I used them in Go. UDP sockets are also provided by the Rust standard library, and are used much as they are in the Go implementation.

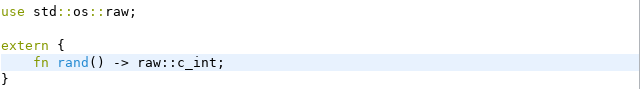

One thing that I couldn't find in the Rust standard library was a random number generator, which is needed by my unit test harness. But one of the things I really liked about Rust was how easy it was to interface my code to the standard C library and call its random number function. Go has a way to do this as well, but it's not nearly as straightforward (but then I didn't need to use it in Go).

If you stick to the expected directory layout, building a Rust library and applications can be done by

cargo build

and running the unit tests is as simple as

cargo test

My Rust applications played just fine with valgrind.

Remarks

I found Go very intuitive to use. Perhaps that was because it was inspired by Communicating Sequential Processes (CSP), a formal language for describing concurrent programs developed by British computer scientist Tony Hoare in 1978, and I recall reading his original paper in graduate school decades ago (and I have his book on CSP around here somewhere).

But more to the point, Go is an excellent fit for the post-Moore's Law world in which processors aren't getting faster, but are providing a lot more execution cores; in which if you want more performance, you need to parallelize your code.

Mostly I think Go is easy to use because it was designed by some pragmatic and experienced software developers who wanted a language in which they could get some work done.

Rust has a well deserved reputation for having a steep and high learning curve. What I did in days in Go took me weeks in Rust. It reminds me of a comment a colleague of mine made decades ago about the Ada programming language: "If you can just get your program to compile, it frequently works the first time." I was sharing my Go and Rust experience with a more contemporary colleague - who has a Ph.D. in physics and had worked at Fermilab - who has also used both languages, and he darkly remarked "I may not be smart enough to use Rust." Which, as we all know, is code for "seems overly complicated". But we both loved Go.

On the other hand, I probably won't be writing any device drivers, hard real-time algorithms, or microcontroller firmware - tasks that are absolutely in my wheelhouse - in Go. Its background garbage collector would make that problematic. But I sure would be tempted to do so in Rust. Rust eliminates entire classes of errors regarding memory leaks and data races by simply eliminating your ability to write code with those bugs. Along the way, it eliminates common - and legitimate - design patterns I've used for years with concurrent code. It's Rust's way or the highway. But maybe that's okay.

In addition to its learning curve, a big complaint I have about Rust is that it's underdocumented. Despite the books and web sites to which Rust aficionados will point you, many of its features are undocumented, and the examples either don't work (because of recent changes in the language) or are too simple to be useful. That makes the Rust learning experience painfully full of reverse engineering and trial and error.

(One of the tricks I learned with Rust was to code a call to some function(s) in the standard library and assign the result to something like an integer variable. The type mismatch error message from the compiler would include the fully qualified type name of the function return. That was often more useful than what little documentation existed. Important safety tip: the "suggestions" made by the compiler were typically not really what I needed to do.)

If you are tempted to use Rust, you have to decide on the economic trade-off between climbing the Rust learning curve versus writing in C or C++ and just avoiding making the kinds of mistakes that Rust eliminates. Those of us that have been writing large systems in C or C++ for decades already know how to do that. But since we're all so old as to almost be dead, Rust might be just the thing for the men and women that replace folks like me.

Update 2018-11-15: one feature both Go and Rust have that put them way ahead of C and C++: they do array bounds checking, made possible by the lack of confusion between arrays and pointers. This is a huge win, not just from a reliability point of view, but security as well: no more buffer overflows. That is probably reason enough to use Rust over C or C++.
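On the Go side, bounds checking looks like this in practice. The sketch below uses a hypothetical helper, safeRead, to turn the run-time panic from an out-of-range index into an ordinary error. The point is that the bad access is caught at the index operation rather than quietly reading or writing adjacent memory.

```go
package main

import "fmt"

// safeRead returns buffer[index], converting the run-time panic raised by
// an out-of-range index into an error instead of crashing the program.
func safeRead(buffer []byte, index int) (value byte, err error) {
	defer func() {
		if r := recover(); r != nil {
			err = fmt.Errorf("recovered: %v", r)
		}
	}()
	return buffer[index], nil // bounds are checked here, at run time
}

func main() {
	buffer := []byte{10, 20, 30, 40}

	value, err := safeRead(buffer, 2)
	fmt.Println(value, err)

	// One past the end: in C this would read whatever follows the array.
	value, err = safeRead(buffer, 4)
	fmt.Println(value, err)
}
```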

I don't see Go and Rust as competitors. I believe that every programming language can be considered a domain specific language, and this is true of Go and Rust. The design of every programming language makes a different compromise in its choice between performance, usability, applicability, and so forth. And every successful programming language survived because it found a niche for which it was unusually well suited. There is no programming language that can fulfill all needs for all people. That's why everything isn't written in Lisp or Smalltalk. But while I might well use Rust for the really low-level stuff, I am pretty sure I could happily write everything else in Go.