In "First-Come First-Served Readers and Writers in C using Monitors", I wrote about my solution to the classic Readers-Writers concurrency problem, in C using POSIX threads. My solution maintained the multiple-reader/single-writer invariants, but enforced access to the shared resource (whatever it was) in strict time order: readers could access the shared resource concurrently providing a writer had not requested accesses ahead of them. While POSIX threads provides its own Readers-Writers lock solution - see pthread_rwlock_rdlock(3) and pthread_rwlock_wrlock(3) - strict time ordering is not necessarily guaranteed.

My diminuto_readerwriter code, part of my Diminuto C-based systems programming library that I've been maintaining and expanding since 2008, worked fine for what I needed at the time. But it had one flaw that prayed on my mind: it required that the application provide an array that the algorithm could use in which to store state. This wasn't a big deal, except that sizing the array was sometimes not straightforward. And it just seemed awkward. Since I was thinking of using the Reader Writer code for another purpose, I finally decided to fix that.

I replaced the array - what the API referred to as the ring buffer - with a diminuto_list (or just List), a doubly-linked-list structure provided by the library, for what the API now refers to as the wait list. The root of the List was part of the structure that defined the diminuto_readerwriter (or just Reader Writer) lock object. Each thread now has a List node that is automatically dynamically allocated on first use and stored in the thread's thread-local storage (see pthread_key_create(3)), and is automatically freed when the thread exits. No matter how many Reader Writer locks you use, you only needed one List node per thread, because a thread can only block on one Reader Writer lock at a time.

This worked out really well. All of the storage management of the wait list was completely under the hood and handled automatically. The application didn't have to worry about sizing anything. And the wait list offered some additional useful capabilities.

It greatly simplified the implementation of timeouts, where a thread could get an error return from its Reader Writer request if it could not be serviced within a specified time interval. This was especially useful in some niche applications in which a timeout of zero is used to poll for resource availability.

It also made it easier to implement priority, or expedited, requests, in which the blocking thread is pushed onto the front of the wait list instead of queued at its end. This allows applications to, for example, give a specific writer priority over readers and other writers. This is useful for real-time events, like the loss of a socket connection, to cancel pending transactions and possibly take corrective action ahead of waiting readers and writers.

But what I really liked about this refactoring of my Reader Writer solution is that it reminded me of how much I like using Diminuto's implementation of Lists. Because it's an implementation that doesn't use null pointers.

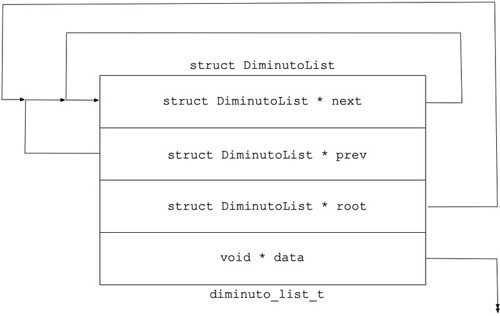

A Diminuto List node consists of four pointer fields: next, prev, root (all of which point to a node) and data (a generic void pointer). (As usual, you can click on an image to see a larger version.)

The next and prev field are obvious: they are the pointers that actually implement the doubly-linked-List. One of the advantages of a doubly-linked-list is that a node can be removed from a List by starting with just a pointer to the node; no other information is necessary to link the prev node with the next node and making the List whole again. Similarly, you can insert a new node into a List using just a pointer to the new node and a pointer a node that is already on the List; the new node can be easily added before or after the existing node.

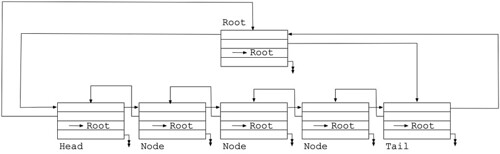

The root field points to the root of the list; this allows the application to identify what List any node is on. When a new node is inserted onto a List, it automatically inherits the root of the node that is already on the list.

Here's the interesting part: when a node is initialized, its next, prev, and root pointers are all set to point to itself. No null pointers (except perhaps for the data pointer; we'll get to that in a bit). All List nodes start out life as a List of one node, serving as their own root.

The root of the list - the node that is used to represent the List itself - starts out life like this: with its root pointer pointing to itself. As new nodes are queued to the end of the List or pushed onto the front of the List, they inherit the root pointer of the root node. Traverse the list in either direction and you come back to the root node. You know it is the root node, because its root pointer points to itself. You know if a List is empty if the next and prev pointers of the root node point to itself. This also makes is trivial to access the head or the tail of a List.

Thus, the Diminuto List isn't just a doubly-linked-list, it is a circular-doubly-linked-list.

There is a simple iterative mechanism in the List API to re-root a list so that some other node represents the List. This works because there is no difference between the root node and any other node on the List.

Except - maybe - the data pointer.

The data pointer is for whatever the application wants to use it for. None of the List implementation implicitly reads or modifies the data field of a node. The List node initialization function doesn't touch the data pointer. Typical applications using a Diminuto List use the data pointer to contain application data (or a pointer to such), or a pointer to the structure in which the List node structure itself is embedded.

The Reader Writer object uses the latter technique: it has embedded in it a List node structure used as the root of the wait list, and the data pointer in that single List node is set to point to the Reader Writer object itself. This allows any thread on the wait list to trivially access the Reader Writer object on which it is waiting by [1] accessing the pointer to its own List node in its thread-local storage, [2] going through the root pointer of that node to the root node, and finally [3] going through the data pointer of the root node.

Meanwhile, the data pointer in every individual thread's List node in its thread-local storage is used to store an enumerated value (not a pointer) used by the algorithm to indicate the state of the thread in regards to the Reader Writer object: waiting reader, waiting writer, pending reader, pending writer, running, etc.

The replacement of the ring buffer with the wait list in the Reader Writer object was straightforward. I wish I had been brave enough to use that approach when I first implemented it. I wrote Diminuto List in C, but I don't see any reason why it couldn't be written in any other language that has pointer references.

No comments:

Post a Comment